2021-04-21

Why Rasa uses Sparse Layers in Transformers

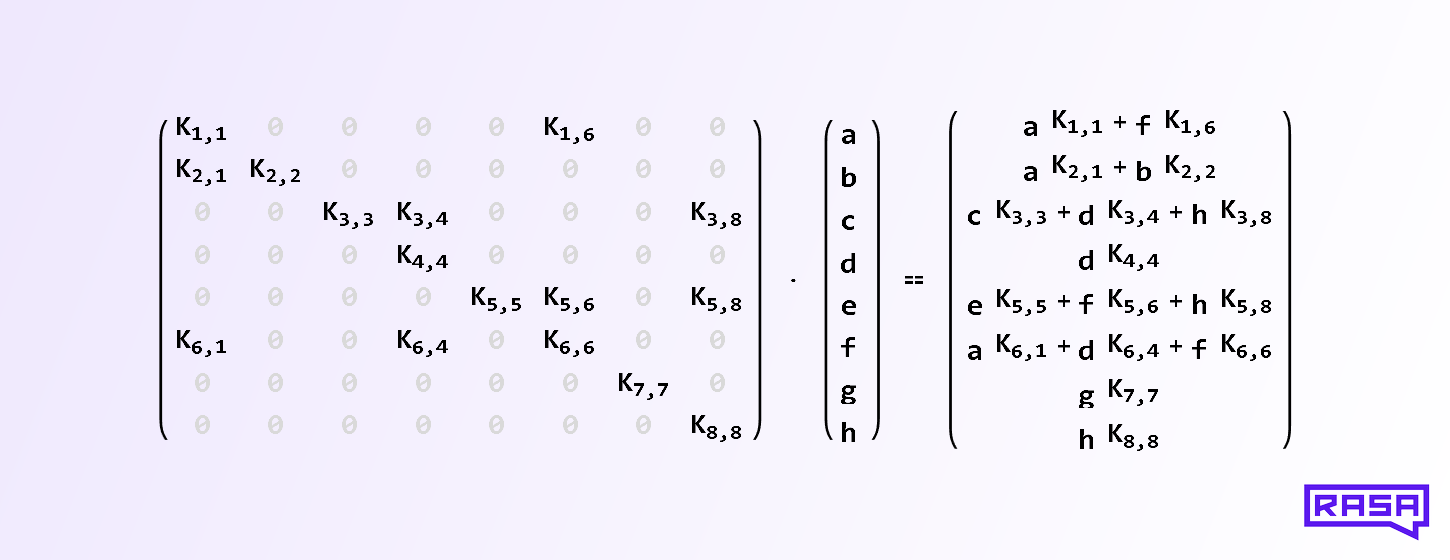

Feed-forward neural network layers are typically fully connected, or dense. But do we actually need to connect every input to every output? And if not, which inputs should we connect to which outputs? It turns out that in some of Rasa’s machine learning models we can randomly drop as much as 80% of all connections in the feed-forward layers throughout training and leave the models' performance unaffected! Here we explore this in more detail.
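
To make "dropping connections" concrete, here is a minimal NumPy sketch of a feed-forward layer whose weight matrix is multiplied by a random binary mask. It is an illustration only, not Rasa's actual implementation: the function names `make_sparse_mask` and `sparse_dense_forward` are hypothetical, and it assumes the mask is sampled once and then held fixed, which is one way to read "throughout training".

```python
import numpy as np


def make_sparse_mask(n_in, n_out, sparsity=0.8, seed=0):
    """Return a 0/1 mask that keeps roughly (1 - sparsity) of all connections.

    Hypothetical helper: each input-output connection is dropped
    independently with probability `sparsity`.
    """
    rng = np.random.default_rng(seed)
    return (rng.random((n_in, n_out)) >= sparsity).astype(np.float32)


def sparse_dense_forward(x, weights, bias, mask):
    """Dense layer forward pass with masked-out connections.

    A weight at a position where mask == 0 never contributes to the
    output, so that connection is effectively removed.
    """
    return x @ (weights * mask) + bias


# Toy dimensions, chosen only for illustration.
n_in, n_out = 256, 128
rng = np.random.default_rng(1)

mask = make_sparse_mask(n_in, n_out, sparsity=0.8)
weights = rng.normal(size=(n_in, n_out)).astype(np.float32)
bias = np.zeros(n_out, dtype=np.float32)

x = rng.normal(size=(4, n_in)).astype(np.float32)  # batch of 4 inputs
y = sparse_dense_forward(x, weights, bias, mask)

# Fraction of connections that survive the mask (about 0.2 for 80% sparsity).
kept_fraction = float(mask.mean())
```

Because the mask multiplies the weights inside the forward pass, the gradient with respect to a dropped weight is zero as well, so in a trainable version of this layer the dropped connections would stay inactive for as long as the mask is unchanged.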