Perceptron at Initialisation

In this notebook I study deep non-linear networks at initialisation. This is a prerequisite for understanding such networks at the end of training, as discussed in The Principles of Deep Learning Theory (PDLT) by Roberts, Yaida, and Hanin. This notebook also demonstrates my DeepLearningTheoryTools paclet for Mathematica and is meant to be read as complementary material to the PDLT book.

Paper reading as a Cargo Cult

Some thoughts on why paper reading groups aren’t helping with research (unless you specialize in reproducing research, which is important, too).

Evidence of Absence

Suppose you think you’re 80% likely to have left your laptop power adapter somewhere inside a case with 4 otherwise-identical compartments. You check 3 compartments without finding your adapter. What’s the probability that the adapter is inside the remaining compartment?
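One way to work through the question is a direct Bayesian update. The sketch below assumes that the 80% prior is spread evenly over the four compartments and that checking a compartment never overlooks the adapter; the function name `prob_in_last_compartment` is made up for illustration.

```python
from fractions import Fraction


def prob_in_last_compartment(prior_in_case, n_compartments, n_checked):
    """Posterior probability that the item sits in one specific unchecked
    compartment, given that n_checked compartments turned up empty.

    Assumptions (illustrative): the prior is spread evenly across the
    compartments, and a check never misses the item.
    """
    per_compartment = prior_in_case / n_compartments
    remaining_in_case = per_compartment * (n_compartments - n_checked)
    not_in_case = 1 - prior_in_case
    # Bayes' rule: renormalise over the hypotheses that survive the checks.
    return per_compartment / (remaining_in_case + not_in_case)


posterior = prob_in_last_compartment(Fraction(4, 5), 4, 3)
print(posterior)  # 1/2
```

Under these assumptions, the three empty compartments remove 60 percentage points of prior mass, leaving 20 points for the last compartment and 20 points for "not in the case at all".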

Research Skills

How can I become a better researcher? I grouped my thoughts on this into four themes: Robustness, Sense of Direction, Execution, and Collaboration.

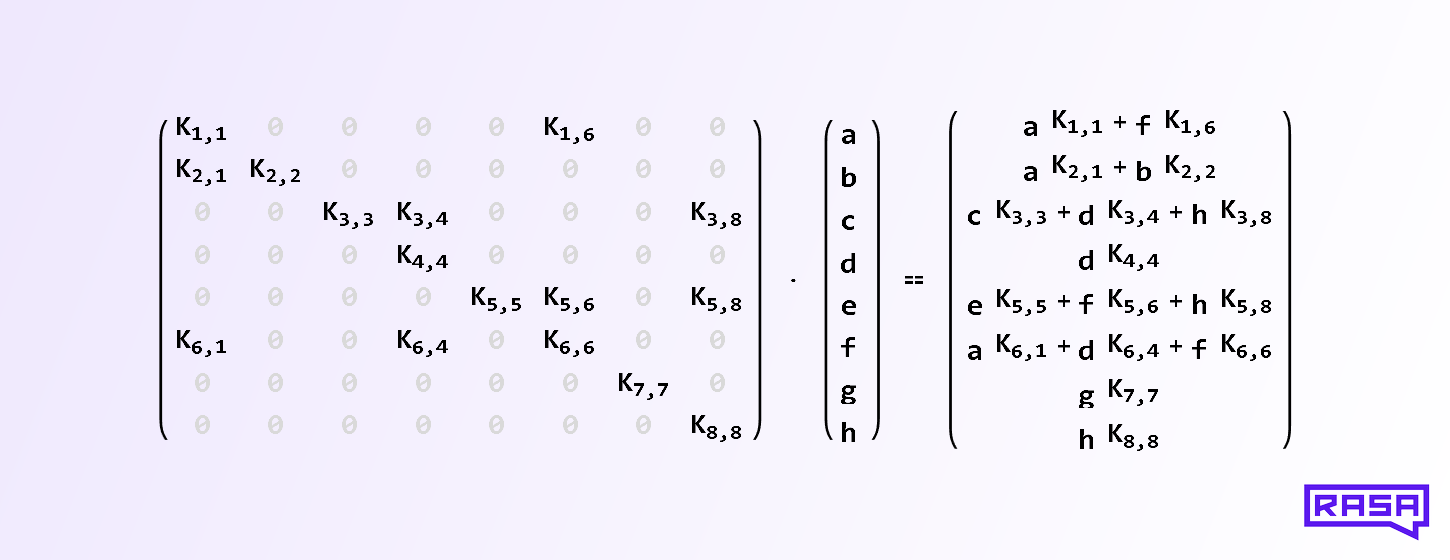

Why Rasa uses Sparse Layers in Transformers

Feed forward neural network layers are typically fully connected, or dense. But do we actually need to connect every input with every output? And if not, which inputs should we connect to which outputs? It turns out that in some of Rasa’s machine learning models we can randomly drop as much as 80% of all connections in feed forward layers throughout training and see their performance unaffected! Here we explore this in more detail.
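To make the idea concrete, here is a minimal NumPy sketch of a feed-forward layer with randomly dropped connections. It assumes the mask is drawn once and then held fixed; this is only one reading of the description above and not Rasa's actual implementation. The names `make_sparse_mask` and `sparse_dense_forward` are hypothetical.

```python
import numpy as np


def make_sparse_mask(n_in, n_out, density, rng):
    """Random 0/1 mask that keeps roughly `density` of all connections."""
    return (rng.random((n_in, n_out)) < density).astype(np.float64)


def sparse_dense_forward(x, weights, bias, mask):
    """Feed-forward layer in which masked-out weights never contribute."""
    return x @ (weights * mask) + bias


rng = np.random.default_rng(0)
n_in, n_out = 256, 128

# Keep about 20% of the connections, i.e. drop about 80% (assumed setting).
mask = make_sparse_mask(n_in, n_out, density=0.2, rng=rng)
weights = rng.normal(size=(n_in, n_out))
bias = np.zeros(n_out)

x = rng.normal(size=(4, n_in))  # a batch of 4 hypothetical inputs
y = sparse_dense_forward(x, weights, bias, mask)
print(y.shape, mask.mean())
```

Because the mask multiplies the weights in the forward pass, gradients for the dropped connections are zero as well, so those connections stay inactive for as long as the mask is kept.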

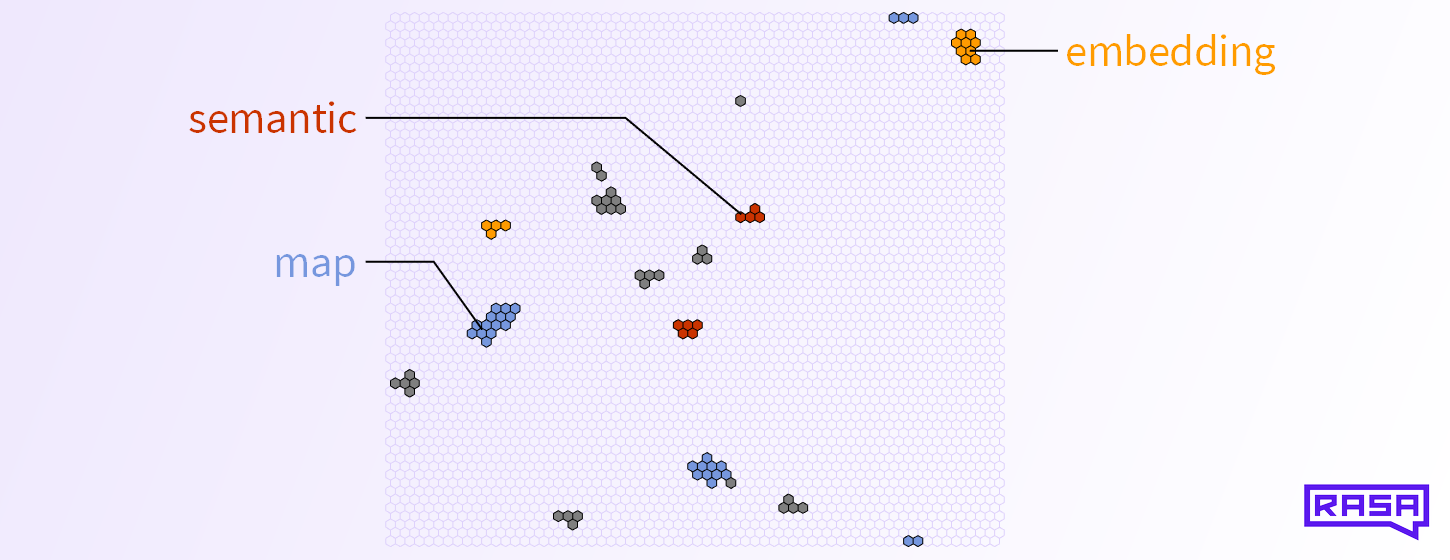

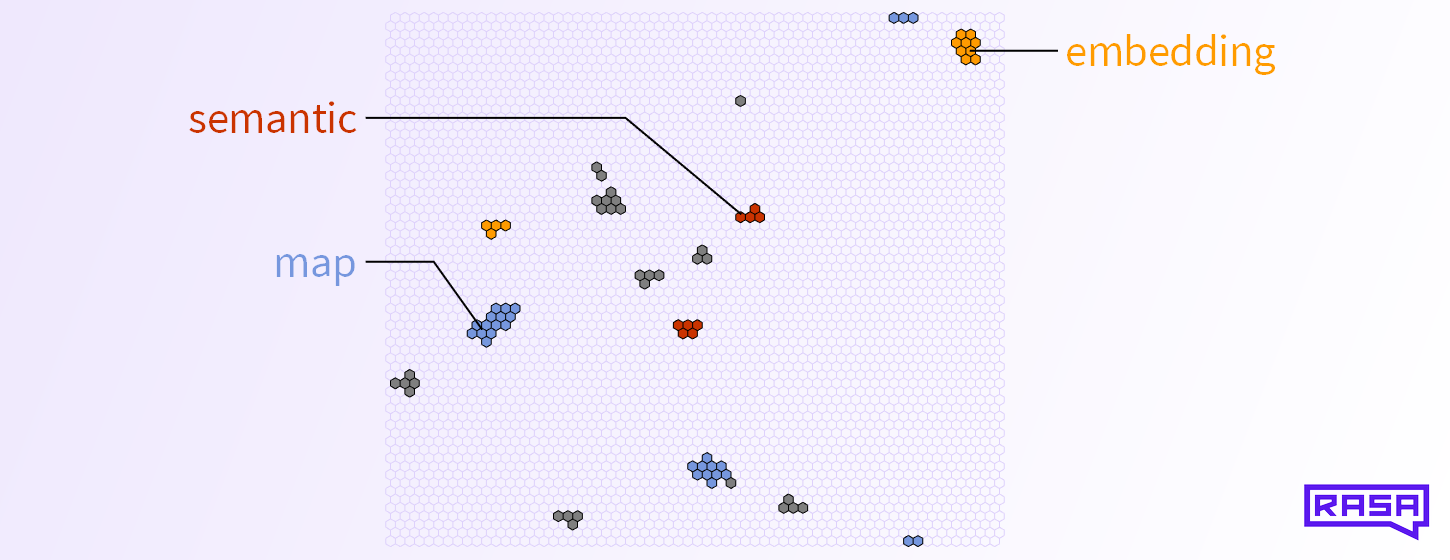

Semantic Map Embeddings – Part II

In Part I we introduced semantic map embeddings and their properties. Now it’s time to see how we create those embeddings in an unsupervised way and how they might improve your NLU pipeline.

Semantic Map Embeddings – Part I

How do you convey the “meaning” of a word to a computer? Nowadays, the default answer to this question is “use a word embedding”. A typical word embedding, such as GloVe or Word2Vec, represents a given word as a real vector of a few hundred dimensions. But vectors are not the only form of representation. Here we explore semantic map embeddings as an alternative that has some interesting properties. Semantic map embeddings are easy to visualize, allow you to semantically compare single words with entire documents, and they are sparse and therefore might yield some performance boost.

Corona Tests and the Bayes Factor

A medical test does not give you the probability that you have a certain disease (such as Corona). Instead, it is evidence, which moves the odds of you having the disease up or down. I wrote a little app that helps you calculate how these odds change.
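The odds update can be sketched in a few lines. This is not the app mentioned above, only an illustration of the arithmetic: posterior odds equal prior odds times the Bayes factor. The function name and the example numbers (1% prior, 90% sensitivity, 95% specificity) are hypothetical.

```python
def update_probability(prior_prob, sensitivity, specificity, positive=True):
    """Update the probability of disease after one test result.

    sensitivity = P(test positive | disease)
    specificity = P(test negative | no disease)
    """
    prior_odds = prior_prob / (1 - prior_prob)
    if positive:
        # Bayes factor of a positive result.
        bayes_factor = sensitivity / (1 - specificity)
    else:
        # Bayes factor of a negative result.
        bayes_factor = (1 - sensitivity) / specificity
    posterior_odds = prior_odds * bayes_factor
    # Convert odds back to a probability.
    return posterior_odds / (1 + posterior_odds)


# Hypothetical numbers, chosen only for illustration.
after_positive = update_probability(0.01, 0.90, 0.95, positive=True)
after_negative = update_probability(0.01, 0.90, 0.95, positive=False)
print(round(after_positive, 3), round(after_negative, 5))
```

With these made-up numbers a positive result multiplies the odds by roughly 18, yet the resulting probability stays well below one half because the prior odds were so low.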

First and Third Person View of Dialogue

Just a note for reference, because I find myself explaining this repeatedly.

Seven Scientists

Seven scientists (A, B, C, D, E, F, G) with widely-differing experimental skills measure a quantity m. You expect some of them to do accurate work, and some of them to turn in wildly inaccurate answers. What is m and how reliable is each scientist?

B. de Mesquita and Smith: The Dictator’s Handbook

For most of my life, I was not really interested in politics. I think, perhaps, this lack of interest originated in my affinity for mechanistic and abstract theories that reflect the real world, and politics always seemed anything but mechanistic and abstract – just a huge mess of opinions and empty talk. I changed my view on […]

Douglas: The Reader’s Brain

Throughout my life in academia, I have received much advice on how I should and shouldn’t write. Countless books have been written on the topic, but The Reader’s Brain is special. Instead of just telling people what to do, Yellowlees Douglas actually explains why it is good to write one way or another, based on […]

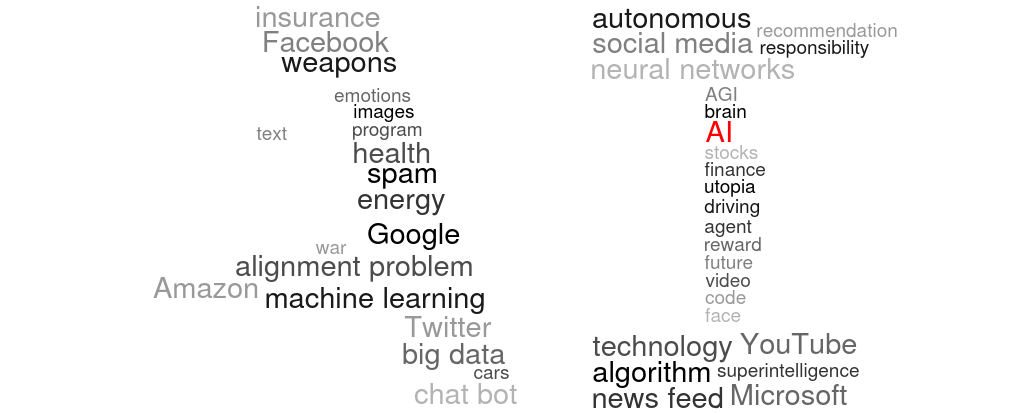

How AI may impact your life and how you can help make this impact a positive one

Buzzwords like artificial intelligence (AI), machine learning (ML), big data, and neural networks appear everywhere around us, but if you are not involved in the field, you may misunderstand them. Thus, here is a short overview of what these words mean, how the associated technologies can affect you personally, and what you can do to […]

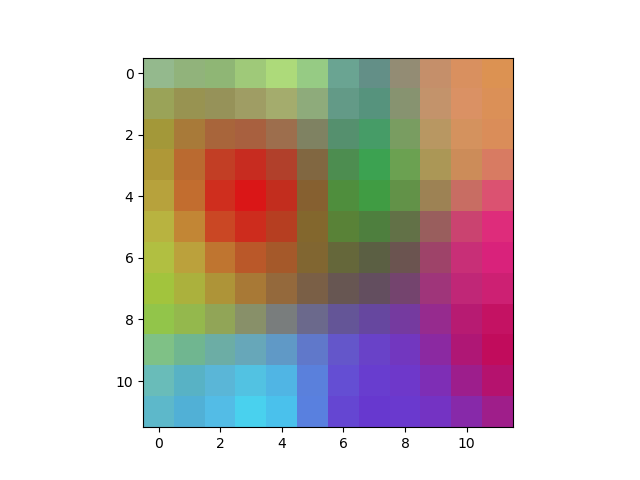

Self-Organizing Maps

Recently, I discovered this neat little algorithm called “self-organizing maps” that can be used to create a low-dimensional “map” (as in cartography) of high-dimensional data. The algorithm is very simple. Say you have a set of high-dimensional vectors and you want to represent them in an image, such that each vector is associated with a […]

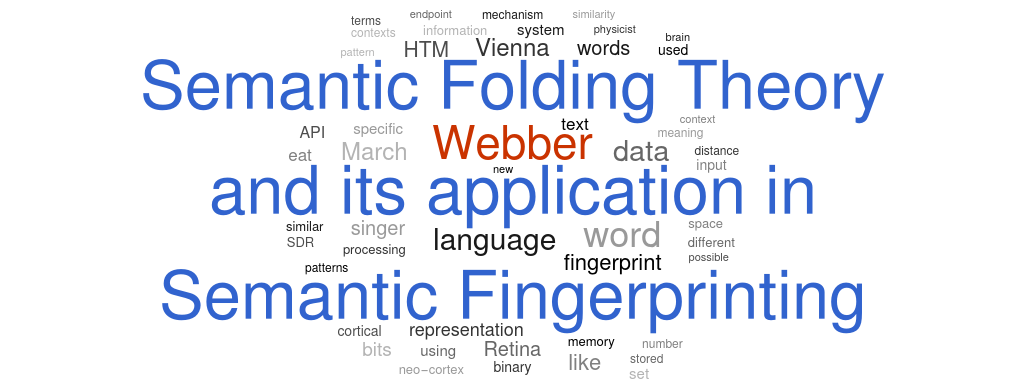

Representing concepts efficiently

This week’s post is about “Semantic Folding Theory and its Application in Semantic Fingerprinting” by Webber. The basic ideas were also discussed in this Braininspired podcast, and presented and recorded at the HVB Forum in Munich. You don’t need any particular prior knowledge to understand this post. In my own words: The space […]

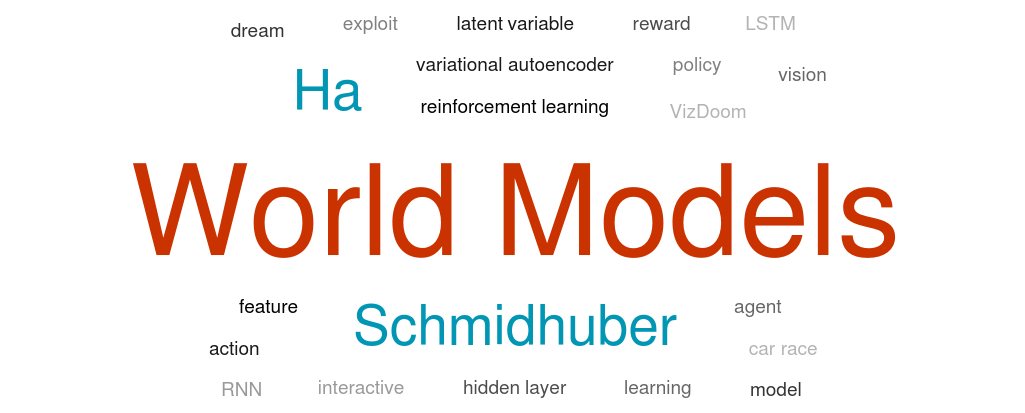

Predicting with world models

This week’s article is “World Models” by Ha and Schmidhuber. You can find a fancy interactive version of the article here. To understand this post, you need to have a basic understanding of neural networks, recurrent neural networks / LSTMs and reinforcement learning. In my own words: Let’s say you want to train a […]

Do all Snickers bars taste the same?

Last year, I noticed that Snickers bars seem to taste different in different countries, but I was not sure. So my partner Nellissa and I conducted a little experiment that involved a lot of chocolate and a little Bayesian statistics. We wanted to establish whether Snickers bars from different countries taste different or not. To […]

West: Scale

Why do we die? Why does any animal die? How old can we expect an average individual of any species to become, and what does that have to do with its body size or heart rate? In “Scale”, Geoffrey West outlines a simple mathematical model that answers all of the questions above with amazing predictive […]

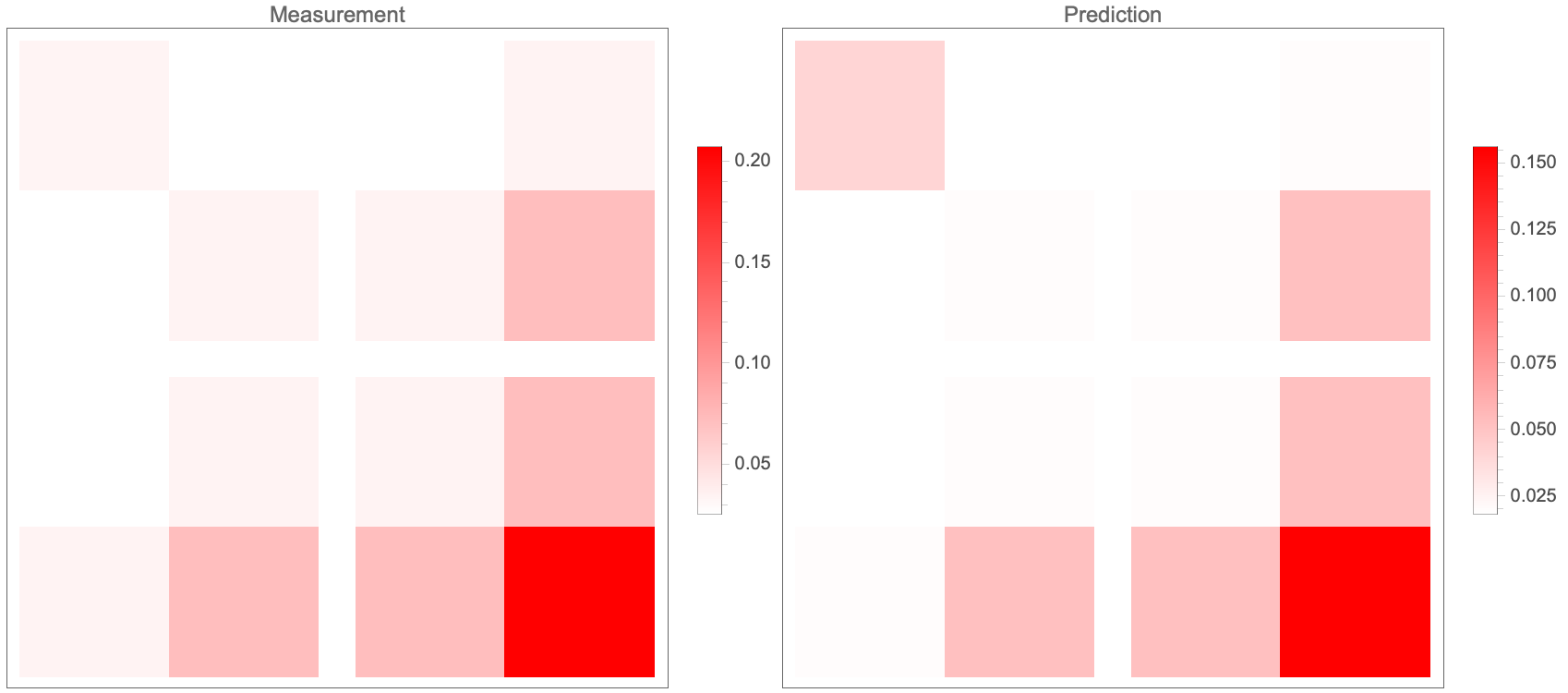

Conditional neural processes

This week’s article is “Conditional Neural Processes” by Garnelo et al. To understand this post, you need to have a basic understanding of neural networks and Gaussian processes. In my own words: A neural process (NP) is a novel framework for regression and classification tasks that combines the strengths of neural networks (NNs) and […]

Christian & Griffiths: Algorithms to live by

This month’s book is “Algorithms to live by”, by Brian Christian and Tom Griffiths. I dread reading my postal mail. Bills here, adverts there, and worst of all: forms to fill out. It feels like such a waste of time! Which is why I sometimes let letters stay in my inbox for several months. Reading […]

Gaussian processes

A Gaussian Process is a mathematical tool that you can use to model a probability distribution from data, i.e. to do regression, classification, and inference.
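As a small illustration of the regression use case, the sketch below computes a Gaussian process posterior with NumPy. It assumes 1-D inputs, a zero prior mean, and a squared-exponential (RBF) kernel with fixed hyperparameters; the function names and the toy data are made up for this example.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel between two 1-D arrays of inputs."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)


def gp_posterior(x_train, y_train, x_test, noise=1e-6):
    """Posterior mean and covariance of a zero-mean GP at x_test."""
    k_tt = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_ts = rbf_kernel(x_train, x_test)
    k_ss = rbf_kernel(x_test, x_test)
    # A Cholesky factorisation avoids forming an explicit matrix inverse.
    chol = np.linalg.cholesky(k_tt)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_ts.T @ alpha
    v = np.linalg.solve(chol, k_ts)
    cov = k_ss - v.T @ v
    return mean, cov


# Toy data: a few samples of sin(x), chosen only for illustration.
x_train = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
y_train = np.sin(x_train)
x_test = np.linspace(-2.0, 2.0, 9)

mean, cov = gp_posterior(x_train, y_train, x_test)
std = np.sqrt(np.clip(np.diag(cov), 0.0, None))
print(mean.shape, std.shape)
```

The posterior standard deviation shrinks towards zero at the training inputs and grows between them, which is the sense in which the process models a distribution over functions rather than a single fit.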

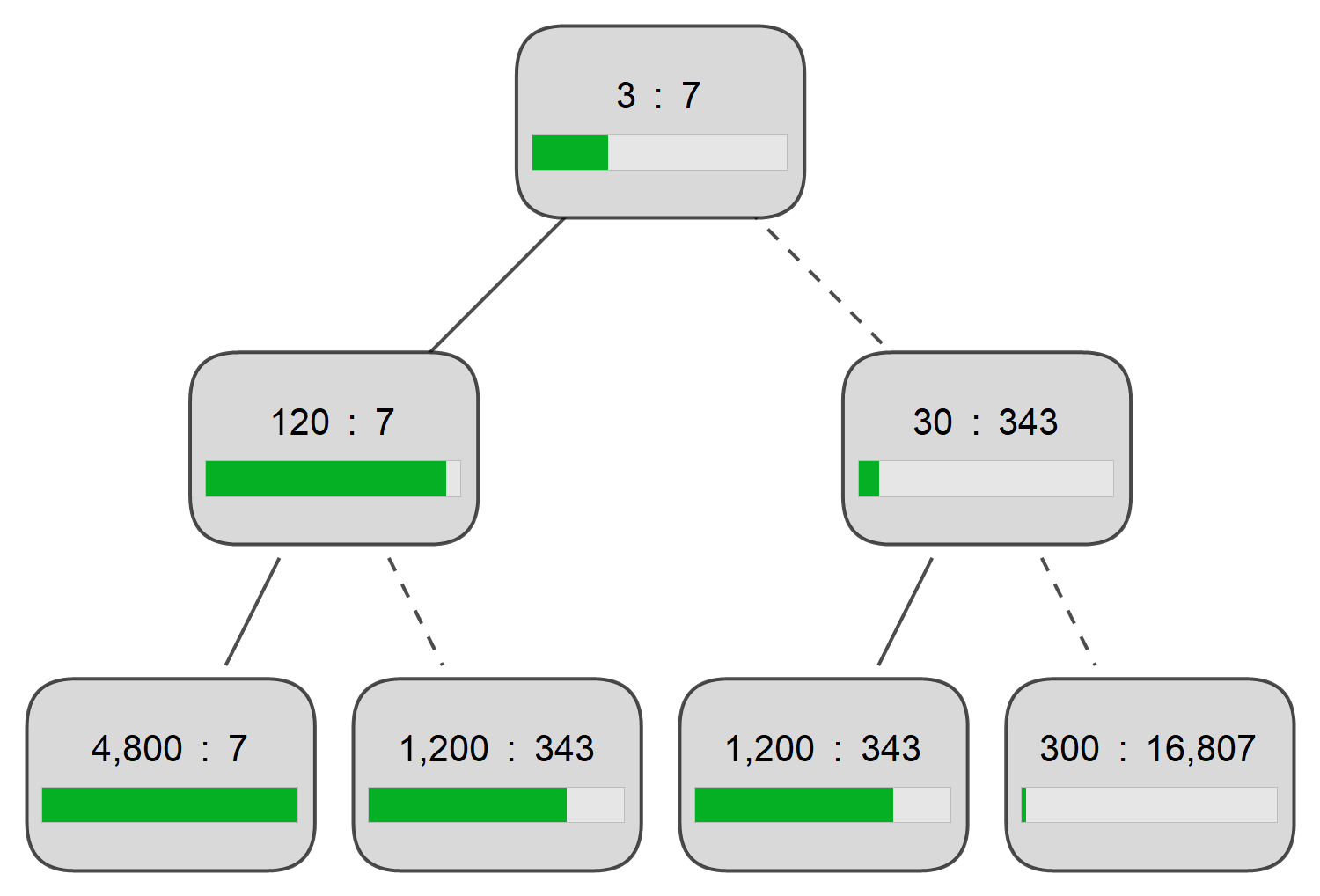

Reinforcement learning and Rubik’s Cube

This week’s article is “Solving the Rubik’s Cube Without Human Knowledge” by McAleer, Agostinelli, Shmakov, and Baldi, which was submitted to NeurIPS 2018. To understand this article, you need to have a basic understanding of neural networks and be familiar with reinforcement learning. In my own words: The Rubik’s Cube is a 3-dimensional combination […]