Perceptron at Initialisation

In this notebook I study deep non-linear networks at initialisation. This is a prerequisite for understanding such a network at the end of training, as discussed in The Principles of Deep Learning Theory (PDLT) by Roberts, Yaida, and Hanin. This notebook also serves as a demonstration of my DeepLearningTheoryTools paclet for Mathematica and is meant to be read as complementary material to the PDLT book.

Paper reading as a Cargo Cult

Some thoughts on why paper reading groups aren’t helping with research (unless you specialize in reproducing research, which is important, too).

Research Skills

How can I become a better researcher? I grouped my thoughts on this into four themes: Robustness, Sense of Direction, Execution, and Collaboration.

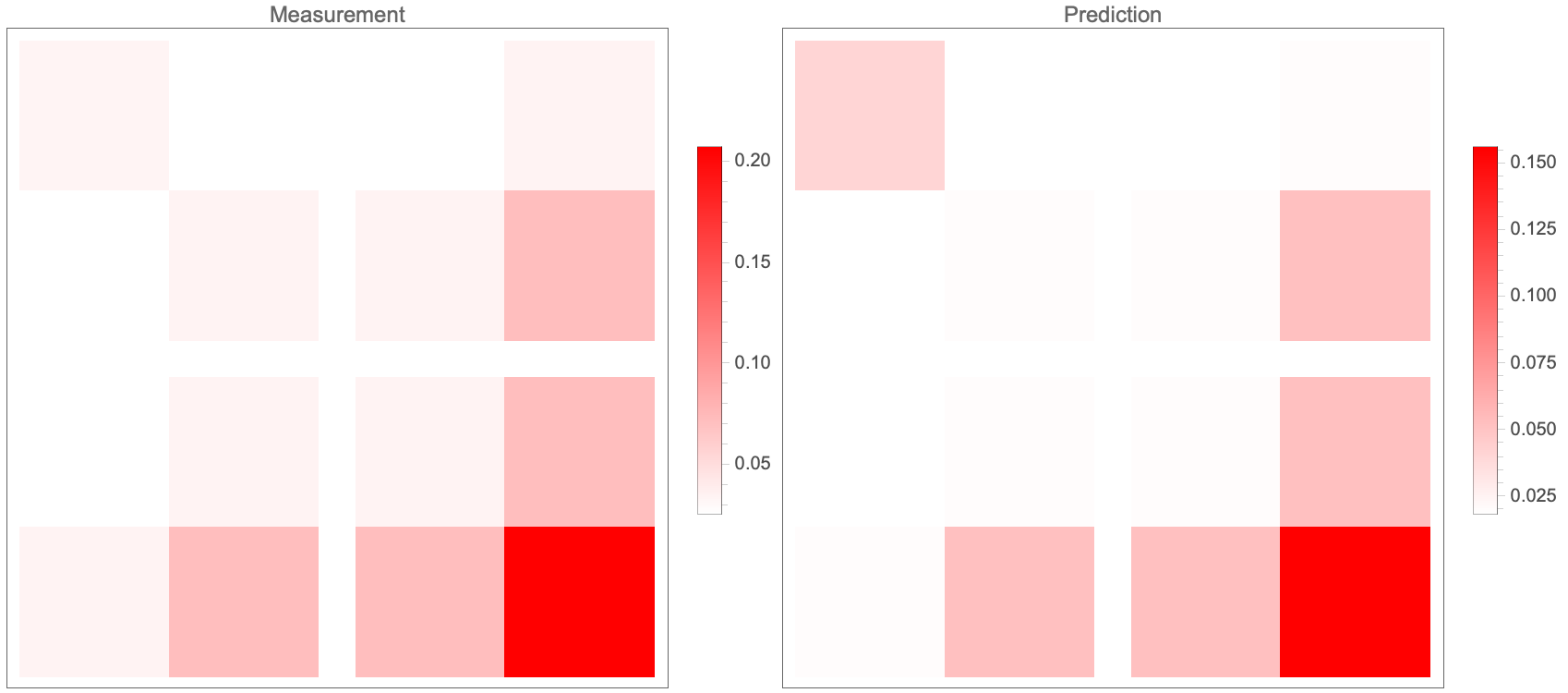

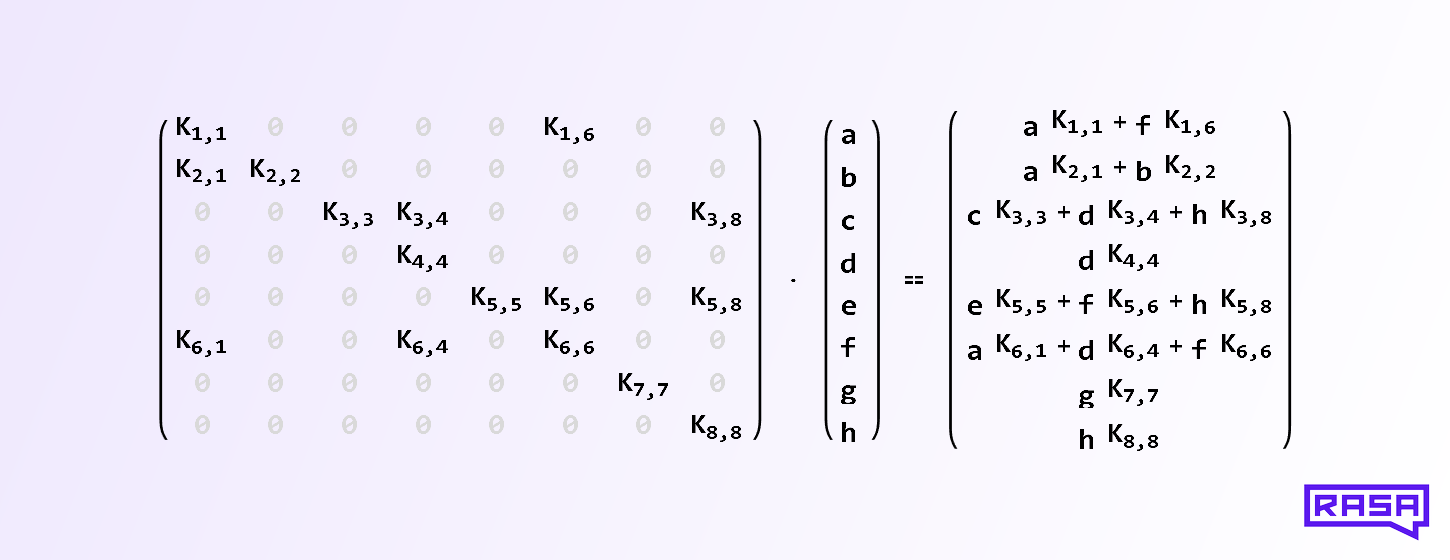

Why Rasa uses Sparse Layers in Transformers

Feed-forward neural network layers are typically fully connected, or dense. But do we actually need to connect every input with every output? And if not, which inputs should we connect to which outputs? It turns out that in some of Rasa’s machine learning models we can randomly drop as much as 80% of all connections in feed-forward layers throughout training without affecting their performance! Here we explore this in more detail.
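To make the idea of randomly dropped connections concrete, here is a minimal NumPy sketch. It is not Rasa's implementation. The function names, the layer sizes, and the choice of a fixed binary mask multiplied into the weight matrix are all assumptions made for illustration.

```python
import numpy as np

def make_sparse_mask(n_in, n_out, density, rng):
    """Hypothetical helper: binary mask that keeps roughly `density` of all connections."""
    return (rng.random((n_in, n_out)) < density).astype(np.float64)

def sparse_dense_forward(x, weights, bias, mask):
    """Forward pass of a dense layer whose weights are masked element-wise."""
    # Connections with mask == 0 never contribute to the output.
    return x @ (weights * mask) + bias

rng = np.random.default_rng(0)
n_in, n_out = 64, 32  # illustrative sizes, not taken from any Rasa model

# Keep about 20% of the connections, i.e. drop about 80%.
mask = make_sparse_mask(n_in, n_out, density=0.2, rng=rng)
weights = rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out))
bias = np.zeros(n_out)

x = rng.normal(size=(8, n_in))  # a batch of 8 input vectors
y = sparse_dense_forward(x, weights, bias, mask)
print(y.shape, mask.mean())
```

Because the mask is created once and reused on every forward pass, the same connections stay dropped throughout training in this sketch. Gradients flowing through `weights * mask` are zero for the masked entries, so those weights never influence the output.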

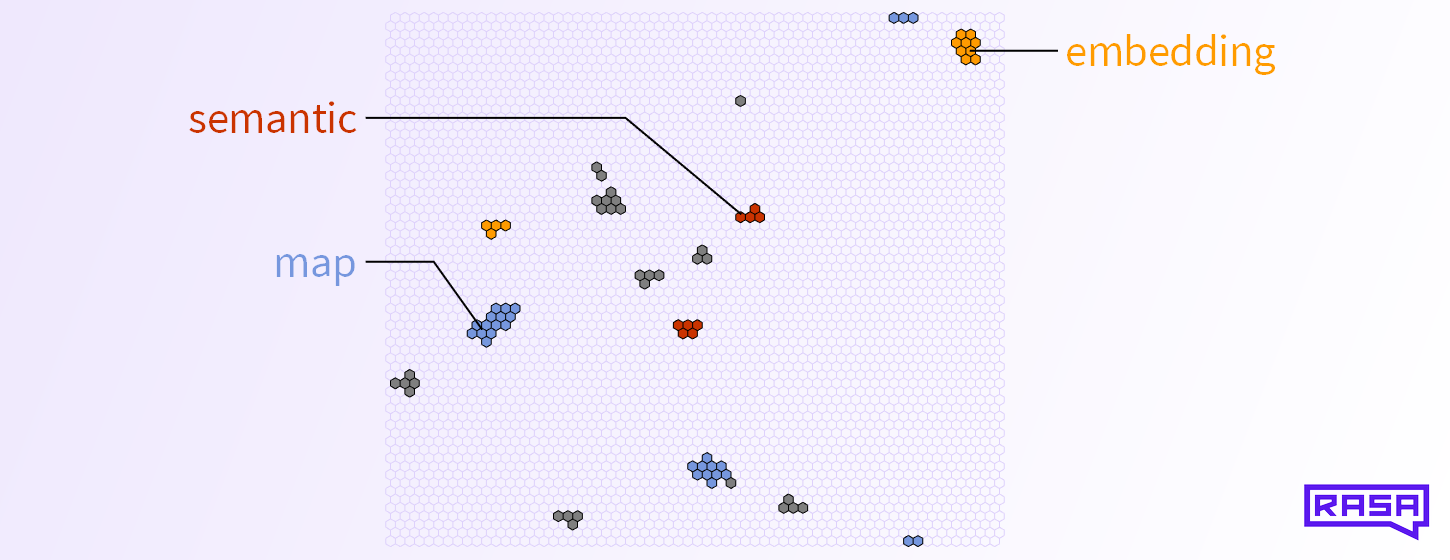

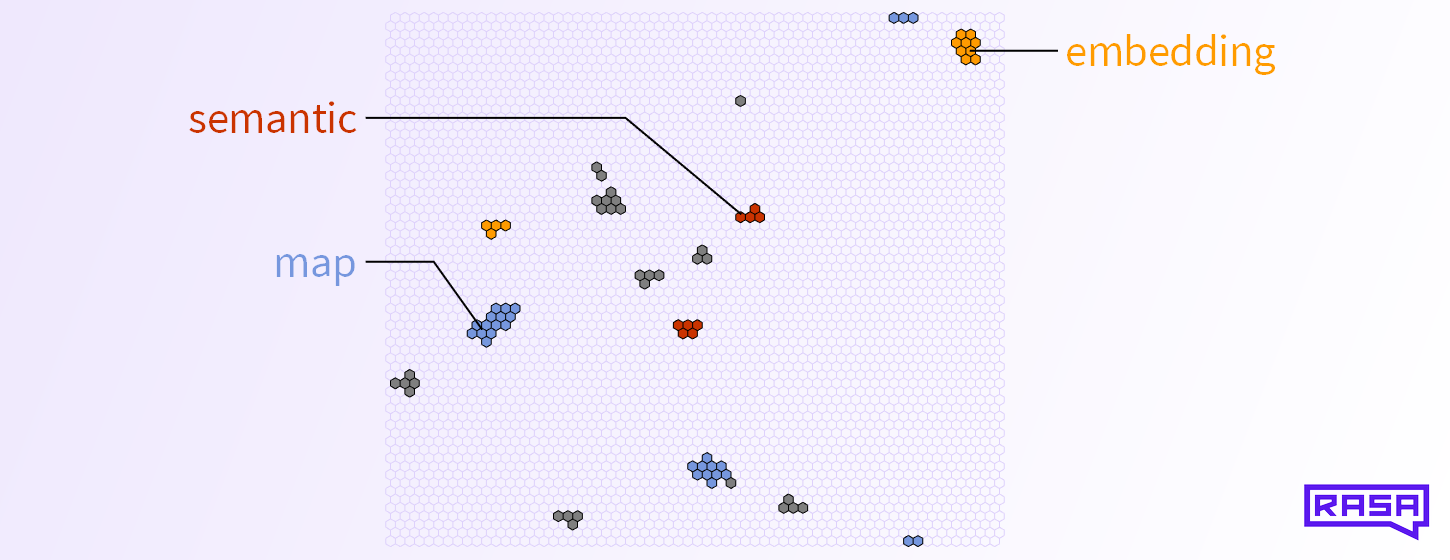

Semantic Map Embeddings – Part II

In Part I we introduced semantic map embeddings and their properties. Now it’s time to see how we create those embeddings in an unsupervised way and how they might improve your NLU pipeline.

Semantic Map Embeddings – Part I

How do you convey the “meaning” of a word to a computer? Nowadays, the default answer to this question is “use a word embedding”. A typical word embedding, such as GloVe or Word2Vec, represents a given word as a real vector of a few hundred dimensions. But vectors are not the only form of representation. Here we explore semantic map embeddings as an alternative that has some interesting properties. Semantic map embeddings are easy to visualize, allow you to semantically compare single words with entire documents, and they are sparse and therefore might yield some performance boost.