How AI may impact your life and how you can help make this impact a positive one

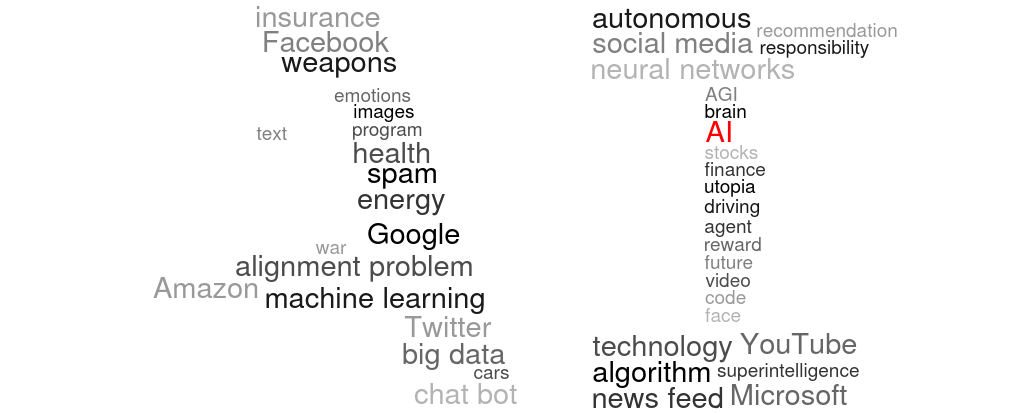

Buzzwords like artificial intelligence (AI), machine learning (ML), big data, and neural networks appear everywhere around us, but if you are not involved in the field, you may misunderstand them. Here is a short overview of what these words mean, how the associated technologies can affect you personally, and what you can do to ensure that their impact is a positive one.

What just happened?

Since the 1990s, advancement in technology has drastically changed the way we go about our lives. Before then, there was no (notable) internet, and you had to press the “Turbo” button on your PC to get to 100 MHz calculation speed. I remember having to go to the payphone on the street if I wanted to call my friends.

Now most people carry around a pocket device that would have been considered a super-computer not too long ago – and you can call anyone across the world or download information on any topic in an instant.

This fundamental change in how we communicate and interact with the world enables companies such as internet service providers (ISPs), search engines (e.g. Google or Baidu), and social networks (e.g. Facebook) to collect huge amounts of data (hence “big data”).

Here, “data” refers to everything from emails and photos, to the way you move your mouse on the screen.

These data, together with improved hardware, are what enabled the ongoing machine learning revolution. Machine learning (ML) refers to a set of methods that enable computers to analyze data – even if the data are “fuzzy”, e.g. images, text, or audio recordings.

Such fuzzy data were originally thought to be impossible for computers to understand. A computer was a calculation machine that was good at adding and multiplying numbers – not at recognizing a human face. But it turned out that the problem of recognizing a human face in an image can be formulated as a mathematical problem. Nowadays, ML techniques enable computers to solve it.

Many of these techniques (such as artificial neural networks) have been known for some time, but since they require powerful computers and large datasets to train on, it was not feasible to use them until about 2006.

Where are we now?

Presently, I suspect that the uses of ML with the greatest social impact are (i) captivating your attention, (ii) automating some tasks that would be simple but slow for humans to do (like cataloging images, improving search results, suggesting restaurants, news feeds and movies that you might be interested in), and (iii) financial and insurance-related applications.

To do these things, ML algorithms essentially learn to model and extract useful information from the data that they are given. The “quality” of these data is essential, however. For example, state-of-the-art image classifiers (a particular kind of ML algorithm) can distinguish a thousand different object categories in images when trained on the ImageNet dataset. But developing a new, specialized image classifier from scratch can still take months, because the existing classifiers do not generalize well and few training datasets are as well organized as ImageNet.

The best results are obtained when the training data can be generated. For example, in board games like chess and Go, the state-of-the-art ML algorithm AlphaZero can beat the previous world champions, even though it learned these games just by playing against itself and learning from the moves it took (so the data were generated by the algorithm itself). But today’s ML techniques also have many problems.

For example, if you train an ML algorithm to recognize faces, but your dataset only contains white people, then it will perform poorly on black people, or vice versa. The good news is that many people are working hard on correcting such biases, and progress is being made.
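
The effect of a one-sided training set can be illustrated with a toy sketch. Everything here is an assumption made for illustration: each "image" is reduced to two invented numbers (brightness and symmetry), the data points are made up, and the nearest-centroid rule stands in for a real face detector, which would be far more complex.

```python
from math import dist
from statistics import fmean

# Hypothetical toy data: each "image" is (brightness, symmetry).
group_a_faces = [(0.80, 0.82), (0.85, 0.78), (0.75, 0.80)]
group_b_faces = [(0.25, 0.81), (0.30, 0.79), (0.20, 0.80)]
non_faces = [(0.30, 0.40), (0.35, 0.35), (0.25, 0.45)]

# Held-out examples used only for evaluation.
test_a = [(0.78, 0.81), (0.82, 0.79)]
test_b = [(0.22, 0.80), (0.28, 0.82)]


def centroid(points):
    """Average point of a list of 2-D points."""
    return (fmean(p[0] for p in points), fmean(p[1] for p in points))


def train(faces, others):
    """'Training' here just means storing one centroid per class."""
    return {"face": centroid(faces), "non_face": centroid(others)}


def is_face(model, x):
    """Classify x as a face if it lies closer to the face centroid."""
    return dist(x, model["face"]) < dist(x, model["non_face"])


def detection_rate(model, faces):
    """Fraction of true faces that the model recognizes as faces."""
    return sum(is_face(model, x) for x in faces) / len(faces)


biased = train(group_a_faces, non_faces)                    # group A only
balanced = train(group_a_faces + group_b_faces, non_faces)  # both groups

for name, model in (("biased", biased), ("balanced", balanced)):
    print(name, detection_rate(model, test_a), detection_rate(model, test_b))
```

In this sketch the model trained only on group A latches onto brightness, a feature that happens to separate its training faces from non-faces, and therefore misses the group B faces. Adding group B to the training data removes the problem without changing the algorithm.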

It is also very difficult to specify what we actually want, because ML systems lack common sense. In a set of ML techniques called reinforcement learning (RL), for example, you train an “agent” by rewarding it for good outcomes. So you may reward an RL agent for collecting points along the track in a boat racing video game, and of course you expect it to finish the race. Instead, the agent learns to deliberately cause an accident that puts it into an infinite loop in which it can collect rewards forever – without ever finishing the race.
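
A minimal sketch of this kind of reward misspecification, under invented assumptions: the track is a line of five positions, targets respawn every time they are hit, and the search is plain brute force over all action sequences rather than an actual RL algorithm. The names `TRACK_END`, `TARGETS`, and `HORIZON` are hypothetical.

```python
from itertools import product

TRACK_END = 4        # reaching this position finishes the race
TARGETS = {1, 2, 3}  # positions that give one point each time they are entered
HORIZON = 10         # maximum number of moves per episode


def run(actions):
    """Play one episode and return (score, finished)."""
    pos, score = 0, 0
    for step in actions:
        pos = max(0, pos + step)  # the boat cannot move behind the start
        if pos == TRACK_END:
            return score, True    # finishing ends the episode, no bonus
        if pos in TARGETS:
            score += 1            # targets respawn, so points repeat
    return score, False


# What the designer had in mind: drive straight to the finish line.
race_score, race_finished = run([+1] * HORIZON)

# What pure score maximization finds: search every possible action sequence.
best_actions = max(product((+1, -1), repeat=HORIZON), key=lambda a: run(a)[0])
best_score, best_finished = run(best_actions)

print("racing: ", race_score, race_finished)
print("optimal:", best_score, best_finished)
```

Racing to the finish collects three points, while the highest-scoring behavior shuttles back and forth between targets for the whole episode and never finishes. Nothing in the reward says "finish the race", so an optimizer has no reason to do so.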

For Facebook and similar platforms, ML algorithms create a model of the social group that you belong to. If you provide enough data (“likes”, comments, or just the information about whom you communicate with), these algorithms will be able to predict your personal preferences with high accuracy.

If the ML algorithm learns how likely you are to react to a certain meme (statement or image), it also knows how to capture your attention. Hence, it can fill your news feed entirely with things that will make you stay there for longer. It is usually programmed to do so, because a longer engagement time makes you more likely to be influenced by the advertisements that appear in between the news.

Unfortunately, we are more inclined to engage with memes that make us angry than with memes that trigger other emotions or are emotionally neutral. More generally, the ML algorithms learn how to exploit our emotional weak points, even though they were not programmed to do so – they just find this strategy as a solution to their goal of maximizing human engagement with a website.
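
How such a preference can emerge without anyone programming it can be sketched in a few lines. This is a toy illustration with made-up numbers, not a description of any real platform's ranking system: the interaction log, the emotion labels, and the candidate posts are all hypothetical.

```python
from collections import defaultdict

# Hypothetical interaction log: (emotion of the post, did the user engage?).
interaction_log = (
    [("anger", True)] * 7 + [("anger", False)] * 3
    + [("joy", True)] * 4 + [("joy", False)] * 6
    + [("neutral", True)] * 2 + [("neutral", False)] * 8
)

# "Learning": estimate the engagement rate for each emotion from the log.
shown = defaultdict(int)
engaged = defaultdict(int)
for emotion, did_engage in interaction_log:
    shown[emotion] += 1
    engaged[emotion] += did_engage
rates = {emotion: engaged[emotion] / shown[emotion] for emotion in shown}

# Hypothetical candidate posts for the next feed: (title, emotion).
candidates = [
    ("cat video", "joy"),
    ("outrage headline", "anger"),
    ("weather report", "neutral"),
    ("political rant", "anger"),
    ("wedding photos", "joy"),
    ("angry reply thread", "anger"),
]

# The only objective is predicted engagement; anger is never mentioned.
feed = sorted(candidates, key=lambda post: rates[post[1]], reverse=True)[:3]
print(feed)
```

The ranking rule only says "show what is most likely to be engaged with". Because the logged behavior favors anger, the resulting feed consists entirely of anger-inducing posts.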

As the video by CGP Grey illustrates, this can be hugely detrimental for human relationships. However, for things like movie or restaurant suggestions, it is great if my phone can suggest things to me that I will actually enjoy.

Other common applications of ML are, for example, face recognition and automatic image enhancement in your camera, email spam filtering, automatic recognition of facial features as used by Snapchat, voice and handwriting recognition, and forecasts of the stock market.

A recent trend is the automatic generation of text, images, and even videos by ML algorithms. Once more, this has some good applications, but it also comes with issues that may soon become disruptive to our society. For example, it is already possible to automatically superimpose a person’s face onto a video recording, so that it looks like that person did things they never did. Imagine if it became cheap to generate a million photo-realistic videos of public figures doing things they have never actually done. This is already possible with voice generation, but with video we are not quite there yet, though I think it should be possible within the next decade.

On the positive side, ML algorithms can help reduce power consumption for server farms and other facilities, as well as help doctors with medical diagnoses and treatment selection.

As a side effect, yet of no less importance, we learn a great deal more about ourselves and how our brains work, as these questions overlap with AI research.

So machine learning is everywhere, and there are good, bad, and risky applications of one and the same technology.

What’s next? – Near-term challenges and what you can do about them

The most pressing near-term issue is probably that of lethal autonomous weapons (LAWs).

All parts of this technology already exist today, but as far as is publicly known, it has not been militarized yet. If we manage to make LAWs as internationally stigmatized as chemical or biological weapons, we may have a chance to prevent fatal scenarios such as the one presented in the accompanying video.

You can find more information about what you can do personally to steer our civilization away from LAWs on this website: autonomousweapons.org, which is supported by thousands of AI researchers (including myself), and backed by the Future of Life Institute and other organizations.

Since ML techniques can only become better over time, we should also expect increasingly sophisticated scams and targeted attacks to make money or gain influence. Platforms like YouTube, Facebook, and Twitter are already in an ongoing arms race with bad actors who try to do just that.

It is a very hard problem, and ML will play a role on both sides of the battle.

The most important component in this battle is you. And thus, you are in the best place to do something about it. For example: (i) always think twice before you share a meme – even if you like it, it may not be true; (ii) socialize with people in the real world and practice critical thinking in day-to-day situations; (iii) always remember that when you write a comment, there is a person on the other end reading it.

A more positive development is the current progress in autonomous driving. This technology – even if only applied to trucks and trains – has the potential to greatly reduce the number of traffic jams and accidents. This could save millions of lives, since more than a million people die in car accidents each year.

If you are a young truck driver, however, you may want to educate yourself about alternative jobs. The displacement and disappearance of jobs in general may be a central issue in the mid-term future.

It is unclear how long it will take until self-driving cars are available to the public. As I wrote earlier, ML algorithms have no common sense. This is what makes the autonomous driving problem so hard. But it is certainly solvable, and I think it would be a net positive development.

Thoroughly beneficial new technologies that may soon come out of machine learning include (i) improved accuracy in medical diagnoses, (ii) automatic optimization of energy distribution in computer clusters and wind farms, and (iii) more helpful digital personal assistants as well as speech- or text-based user interfaces.

We can also expect advances in medicine through indirect contributions of ML. For example, DeepMind recently created AlphaFold, a novel algorithm that predicts how proteins fold with high accuracy. Progress on this protein folding problem is essential for medical research.

Where does this go? – Long-term problems and why you might care about them

You may have noticed that so far I have used the term machine learning (ML) exclusively, and have not written anything about artificial intelligence (AI). This is because these terms are not clearly defined, and AI is especially hard to pin down. Specifically, it seems like whenever a new AI algorithm solves a previously unsolvable problem (like face recognition), it is not considered AI anymore.

Today’s ML algorithms are good at specific tasks, and it is debatable whether they can become generally intelligent without a major paradigm shift. Nevertheless, for artificial general intelligence (AGI) the question is not “if”, but “when” it will be created (barring prior destruction of our global society). Predictions about AGI timelines vary a lot, but mostly focus on the present century.

When talking about AGI, an algorithm’s competence is typically compared to that of a human. If we manage to write a computer program that is able to solve every cognitive task an average human can solve (from arithmetic to composing music and consoling a heartbroken friend), then we’d say that we have created AGI.

But there is no good reason to assume that it stops at the human level. There may be levels of intelligence that are vastly superior to ours. This is known as superintelligence. You could imagine a single superintelligent AGI as a whole civilization of Einsteins and Mozarts who all work together in perfect harmony and think 100 times faster than their human equivalents, since they are not bound by the limitations of actual biological brains.

Before we program the first AGI, however, there is (at least) one critical issue to solve: the alignment problem.

It roughly goes like this: How do I ensure that a being that is vastly superior to me keeps doing what I would approve of? Even if it knows that what I want is stupid and I am incapable of seeing this?

Personally, I concur with Eliezer Yudkowsky’s perspective that we can be certain that if we build a superintelligence without solving the alignment problem first, then it will change the world in a way we do not approve of, and (by definition) it will be damned good at doing this.

The alignment problem is extremely hard, and we will likely need at least as long to solve it as we need to develop AGI. Therefore, we have to start working on it right now. Research institutes like MIRI and the Future of Humanity Institute are committed to solving this problem, but considering its scope, there should be many more people working on this.

If you are not a mathematician or AI researcher, there is still something that you should think about: What society do we actually want our descendants to live in? What do we value? If you had the power of a superintelligence, what should you try to do? This is something that has to be decided by all of us, AI researcher or not. The Future of Life Institute is in fact running a survey about this.

Final words

If you have never thought deeply about AI, I imagine that you probably hold one of two viewpoints.

One viewpoint is that when you list the benefits and problems, you decide that the benefits are not worth the risk. So why do all that? Can’t we just stop here, or even downgrade to an earlier level of technology?

The answer is no, we cannot. First of all, the economy requires us to never stop innovating – it won’t work otherwise. And innovation also means developing ever smarter ML/AI algorithms. We might want to change how the economy works, of course, but this is beyond the scope of this article.

Second, the world has already changed and continues to do so. Things that worked in the past (e.g. combustion engines) often don’t work as a long-term solution (they cause climate change and depend on limited resources). Today’s problems (climate change, organizing a global society, etc.) are more complicated than anything we have dealt with in the past, and solving them requires novel ideas and technologies.

The other viewpoint is that “everything will be alright”. This is the gut feeling that no matter what we do, no matter how bad things get, we’ll somehow get out of it and move on.

But the most important lesson that I have learned in my study of physics is that nature does not care about us. That is why it is so important that we care about each other.

Nature does not care if we live or die, if we are happy or if we suffer. It does not even care if we understand nature, or if we have a sense of purpose. Because nature is not a person. These things matter to us, however, and we need to get our act together to make this right.

This insight is particularly important when we consider the big picture. There is no grand plan. No guarantee that we are heading in a good direction, no safety net that ensures that life continues irrespective of what we do. But there are also no constraints on how good the world could become, apart from the laws of physics.

It is up to us to figure out what direction is good for us, where we want to go, and how we get there.

Personally, I think we should aim to develop AI that helps us become the best people that we can be. Instead of suggesting enraging memes to us, it should help us understand each other better. Instead of learning to manipulate us for the sake of power and influence, it should learn to motivate us to reach excellence at the things we love to do. Once we become the best version of ourselves, we can decide where we want to go from there.