Why Rasa uses Sparse Layers in Transformers

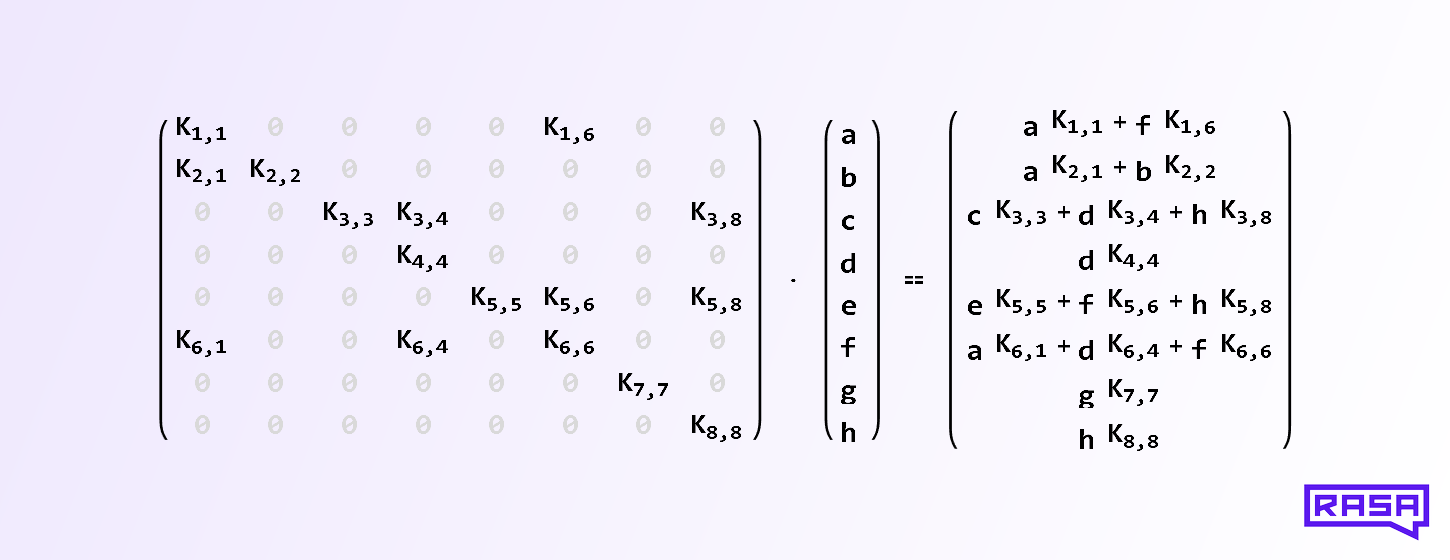

Feed-forward neural network layers are typically fully connected, or dense. But do we actually need to connect every input with every output? And if not, which inputs should we connect to which outputs? It turns out that in some of Rasa’s machine learning models we can randomly drop as much as 80% of all connections in feed-forward layers throughout training without affecting their performance! Here we explore this in more detail.
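To make "dropping connections" concrete, here is a minimal NumPy sketch of a randomly sparse feed-forward layer. It is an illustration, not Rasa's implementation: the class name `RandomlySparseLayer`, the `density` parameter, and the choice to draw one fixed binary mask at initialization are all assumptions made for this example.

```python
import numpy as np


def make_sparse_mask(n_in, n_out, density=0.2, seed=0):
    """Binary mask that keeps roughly `density` of the n_in x n_out connections."""
    rng = np.random.default_rng(seed)
    return (rng.random((n_in, n_out)) < density).astype(np.float32)


class RandomlySparseLayer:
    """Hypothetical feed-forward layer whose dropped connections stay dropped."""

    def __init__(self, n_in, n_out, density=0.2, seed=0):
        rng = np.random.default_rng(seed)
        # Assumption: the mask is drawn once and never changes during training.
        self.mask = make_sparse_mask(n_in, n_out, density, seed + 1)
        # Scale by the expected number of surviving inputs per output unit.
        scale = 1.0 / np.sqrt(n_in * density)
        self.weights = rng.normal(0.0, scale, (n_in, n_out)).astype(np.float32)
        self.bias = np.zeros(n_out, dtype=np.float32)

    def forward(self, x):
        # Masked weights contribute nothing, so those connections do not exist.
        return np.maximum(x @ (self.weights * self.mask) + self.bias, 0.0)

    def apply_gradient(self, grad_weights, learning_rate=0.01):
        # Masking the gradient keeps dropped connections from being trained.
        self.weights -= learning_rate * grad_weights * self.mask


layer = RandomlySparseLayer(n_in=256, n_out=128, density=0.2)
outputs = layer.forward(np.ones((4, 256), dtype=np.float32))
```

With `density=0.2`, about 80% of the entries in `layer.mask` are zero, which corresponds to the 80% of connections mentioned above. A dense matrix multiplied by a mask does not save any computation by itself; it only shows which connections are active.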

Semantic Map Embeddings – Part II

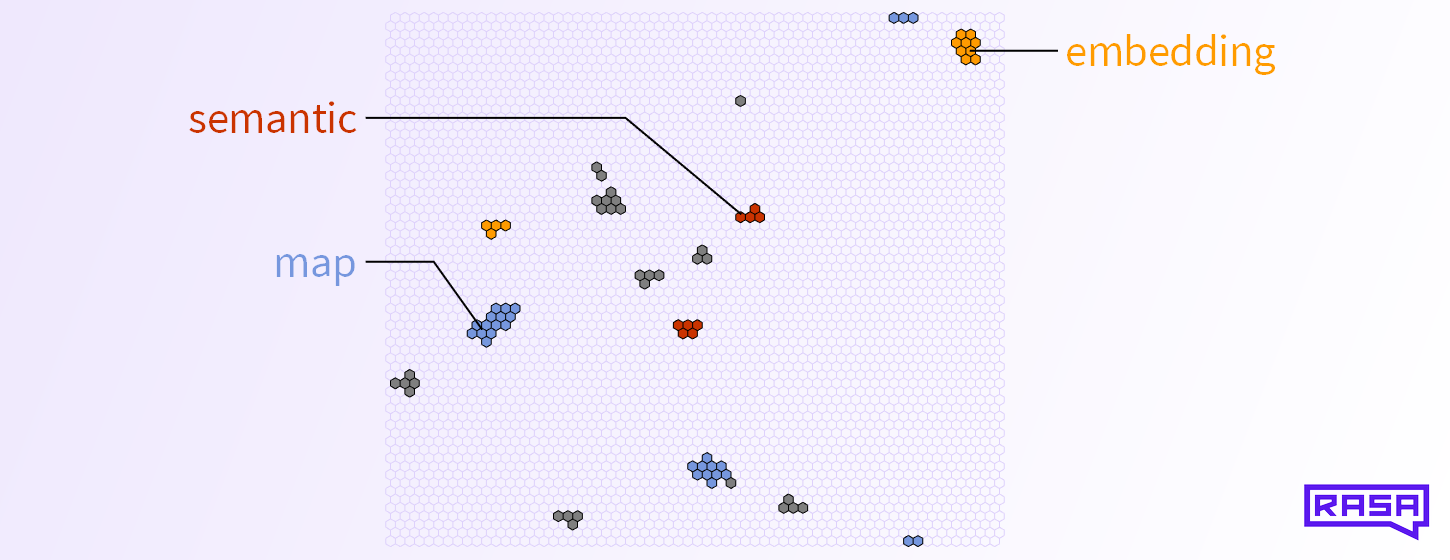

In Part I we introduced semantic map embeddings and their properties. Now it’s time to see how we create those embeddings in an unsupervised way and how they might improve your NLU pipeline.

Semantic Map Embeddings – Part I

How do you convey the “meaning” of a word to a computer? Nowadays, the default answer to this question is “use a word embedding”. A typical word embedding, such as GloVe or Word2Vec, represents a given word as a real vector of a few hundred dimensions. But vectors are not the only form of representation. Here we explore semantic map embeddings as an alternative that has some interesting properties. Semantic map embeddings are easy to visualize, allow you to semantically compare single words with entire documents, and are sparse, which might yield a performance boost.
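As a rough illustration of how a sparse representation can compare a word with a document, the sketch below treats each embedding as a small boolean grid. Everything here is assumed for the example: the 8x8 grid size, the hand-picked active cells, the helper names `to_map`, `merge`, and `overlap`, and the idea of building a document map as the union of its word maps. The posts themselves describe the actual construction.

```python
import numpy as np

GRID_SHAPE = (8, 8)  # toy size chosen for this example


def to_map(active_cells):
    """Build a sparse boolean map from a list of (row, column) positions."""
    grid = np.zeros(GRID_SHAPE, dtype=bool)
    for row, column in active_cells:
        grid[row, column] = True
    return grid


def merge(maps):
    """Assumed document map: a cell is active if it is active in any word map."""
    return np.logical_or.reduce(maps)


def overlap(map_a, map_b):
    """Shared active cells, normalized by the smaller map's active-cell count."""
    shared = np.logical_and(map_a, map_b).sum()
    smaller = min(map_a.sum(), map_b.sum())
    return float(shared / smaller) if smaller else 0.0


# Hand-picked toy maps, not derived from any real corpus.
cat = to_map([(0, 0), (0, 1), (1, 0)])
dog = to_map([(0, 1), (1, 0), (1, 1)])
car = to_map([(7, 7), (6, 7)])

pets_document = merge([cat, dog])
cat_vs_document = overlap(cat, pets_document)
car_vs_document = overlap(car, pets_document)
```

Because a word and a document live in the same grid, the same `overlap` function compares either pair. In this toy data `cat` shares all of its active cells with `pets_document`, while `car` shares none.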

First and Third Person View of Dialogue

Just a note for reference, because I find myself explaining this repeatedly.

Douglas: The Reader’s Brain

Throughout my life in academia, I have received much advice on how I should and shouldn’t write. Countless books have been written on the topic, but The Reader’s Brain is special. Instead of just telling people what to do, Yellowlees Douglas actually explains why it is good to write one way or another, based on […]

Representing concepts efficiently

This week’s post is about “Semantic Folding Theory and its Application in Semantic Fingerprinting” by Webber. The basic ideas were also discussed in this Braininspired podcast, and were presented and recorded at the HVB Forum in Munich. You don’t need any particular prior knowledge to understand this post. In my own words: The space […]