Semantic Map Embeddings – Part II

In Part I we introduced semantic map embeddings and their properties. Now it’s time to see how we create those embeddings in an unsupervised way and how they might improve your NLU pipeline.

Semantic Map Embeddings – Part I

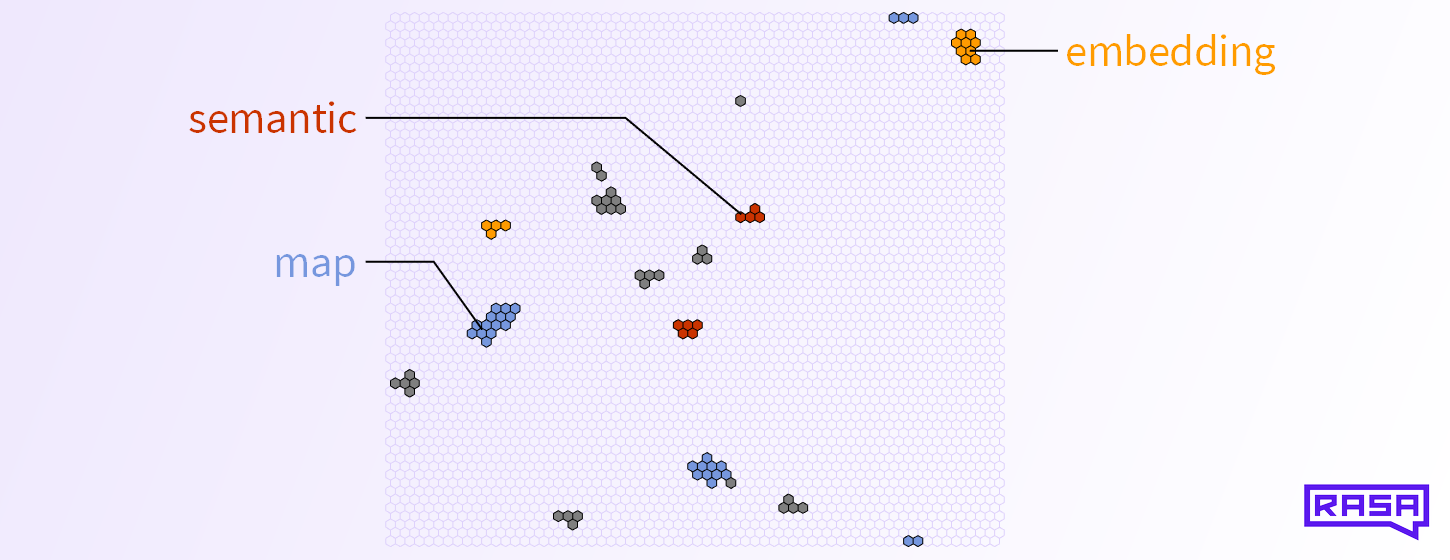

How do you convey the “meaning” of a word to a computer? Nowadays, the default answer to this question is “use a word embedding”. A typical word embedding, such as GloVe or Word2Vec, represents a given word as a real vector of a few hundred dimensions. But vectors are not the only form of representation. Here we explore semantic map embeddings as an alternative that has some interesting properties. Semantic map embeddings are easy to visualize, allow you to semantically compare single words with entire documents, and are sparse, which might yield a performance boost.
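To make the idea of comparing a single word with an entire document a bit more concrete, here is a minimal sketch. It assumes each embedding is a sparse binary map stored as the set of its active cell indices, that a text-level map can be formed as the union of its word maps, and that similarity is measured by overlap. The toy data and the names `overlap_score` and `merge_maps` are made up for illustration and are not taken from the posts themselves.

```python
# Toy sketch: sparse binary "maps" stored as sets of active cell indices.
# All data and function names here are hypothetical illustrations.

def overlap_score(a: frozenset, b: frozenset) -> float:
    """Fraction of the smaller map's active cells that are also active in the other."""
    if not a or not b:
        return 0.0
    return len(a & b) / min(len(a), len(b))


def merge_maps(maps) -> frozenset:
    """Combine word maps into one text-level map by taking their union (an assumption)."""
    merged = set()
    for m in maps:
        merged |= m
    return frozenset(merged)


# Made-up active cells; in a real map these would index positions on a 2D grid.
word_maps = {
    "cat": frozenset({10, 11, 12, 500}),
    "dog": frozenset({11, 12, 13, 900}),
    "tax": frozenset({7000, 7001, 7002}),
}

# A tiny "document" consisting of two words.
doc = merge_maps([word_maps["cat"], word_maps["dog"]])

cat_vs_dog = overlap_score(word_maps["cat"], word_maps["dog"])
cat_vs_doc = overlap_score(word_maps["cat"], doc)
tax_vs_doc = overlap_score(word_maps["tax"], doc)
```

Because only the active cells are stored, the comparison touches a handful of indices rather than a dense vector, which is where a possible performance benefit of sparsity would come from.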

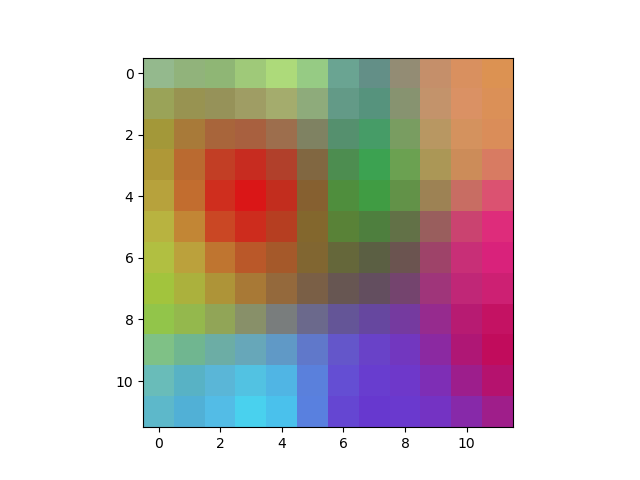

Self-Organizing Maps

Recently, I discovered this neat little algorithm called “self-organizing maps” that can be used to create a low-dimensional “map” (as in cartography) of high-dimensional data. The algorithm is very simple. Say you have a set of high-dimensional vectors and you want to represent them in an image, such that each vector is associated with a […]

Representing concepts efficiently

This week’s post is about “Semantic Folding Theory and its Application in Semantic Fingerprinting” by Webber. The basic ideas were also discussed in this Braininspired podcast, and presented and recorded at the HVB Forum in Munich. You don’t need any particular prior knowledge to understand this post. In my own words: The space […]